The Genius of Olaf Stapledon’s Star Maker

There is a great YouTube channel I recommend for sci-fi enthusiasts, Bookpilled. Its anonymous host, a “digital nomad”, has reviewed several hundred science-fiction novels, in 86 videos over the last two years. Almost all come from what he calls “the pre-bloat era” — the 20th Century. I really like the discrimination, word choice, emotion, expressions, and insights he brings to his reviews. I also appreciate his thoughts on the evolution of sci-fi as a genre, and on the great value of literature in general. Check out his channel and see if you like it. Consider supporting his Patreon if you do. He makes $830 a month there at present. That is a testament to the value of his reviews, but not yet a living wage, which I hope he’ll get soon.

Bookpilled recently posted a five-minute review of Olaf Stapledon’s Star Maker, 1937. Star Maker is one of the foundational books of science fiction, and still one of the most visionary. In cosmic science fiction, it is the ur-book (the earliest, the original), the one from which all others sprang. What I particularly like about this polymathic and philosophical book is that it predicted the evo-devo nature of the universe, at least as I see it.

His review of Star Maker is the middle one in the set of three in the video below. Here is a link if you want to skip directly to it.

His review inspired me to share my own thoughts on this great book, here on my blog and over on Medium. I hope you like them. As always, please let me know what you think as well, either in the comments, or privately at johnsmart at gmail, as you prefer.

This is a beautiful and honest review of Star Maker, 1937, one of the foundational novels of science fiction. Every reader deserves to know that Arthur C. Clarke, one of the greatest sci-fi novelists of the 20th century, who was also a scientist and a futurist, was deeply influenced by this book. He called it “probably the most powerful work of imagination ever written.”

I bet Clarke, a great world builder himself, realized that the main ideas in Stapledon’s sweeping book together form the most elegant model that philosophers have yet proposed for the nature and future of our universe. The first is a noosphere developing, for Earth and for the Cosmos, one that must progressively integrate and regulate all its diverse minds and cultures. The second is the multiverse as both the source of our present universe and the place intelligence will go when this universe dies, as it must.

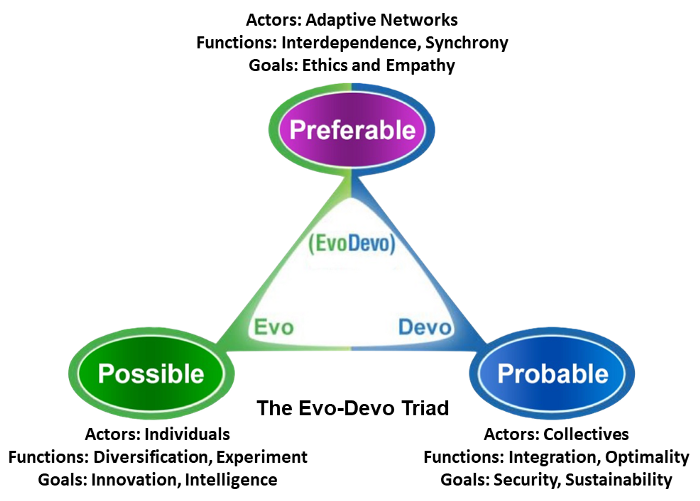

In my view, the Stapledonian combination of the Noosphere, the Cosmosphere (his unique contribution), and the Multiverse remains the best model for the nature and future of our universe as a complex adaptive system, if it is both an evolutionary and developmental (“evo-devo”) system, replicating under selection in the multiverse. For one modern scientific hypothesis of our universe as a replicator in the multiverse, with early evidence from parametric tuning and black hole dynamics, see Lee Smolin’s Cosmological Natural Selection hypothesis, and his excellent book, The Life of the Cosmos, 1999.

Isaac Asimov, perhaps the only other contender for the 20th century’s best combination of sci-fi novelist and science writer, also thought Star Maker was truly special. I’d argue that Asimov’s most brilliant and famous short story, The Last Question, 1956, was influenced by this book. Apparently one of his last sci-fi books, Nemesis, 1989, on networked consciousness, was also directly influenced by Stapledon’s book.

Thank you for sharing your view that the beginning and end of Star Maker were the best parts, for you, and that most of the rest was a painful slog to finish. Such opinions are very helpful to “skim readers”, those of us who use skimming and scanning techniques to read our novels, rather than reading them word for word. See the Appendix to this article if you want some tips on skim reading, and thoughts on its future.

For those who don’t plan to read this important but difficult book, let me recommend the Wikipedia plot summary of Star Maker. For the vast majority of us, I think a plot summary is all we need from books like Star Maker, along with a few reviews of its impact and value, like this one. Also, as a wonderful recent development, we can now talk to a good large language model, like ChatGPT, about any book, asking the AI questions about it and requesting summaries of its key ideas. I also recommend donating money to Wikipedia occasionally, and paying, if you can, for the best commercial LLMs (ChatGPT 4, today).

Wikis and LLMs are presently very limited but also very important aspects of our emerging noosphere (group mind), one of the topics Stapledon writes so cosmically about.

If you’re curious to read more about the development of the noosphere, and want to start with the ur-book in nonfiction, let me recommend The Human Phenomenon (mistranslated in English as “The Phenomenon of Man”), 1955, by the Jesuit priest, philosopher, and paleontologist Pierre Teilhard de Chardin. Interpret his Christian beliefs figuratively, rather than literally, and you have a brilliant first take on the increasing empathy, ethics, and consciousness of adapted complexity in our universe, hypothesized as a plausible universal developmental process, under evolutionary selection.

Many other works have been written since, in fiction and nonfiction, that explore how this noosphere may develop. The futurist Gregory Stock’s Metaman: The Merging of Humans and Machines into a Global Superorganism, 1993, is a very good nonfiction update, with a good history of those who have speculated on the nature of the future human superorganism. So are the works of the great systems theorist Fritjof Capra, especially The Web of Life, 1997, and The Systems View of Life, 2014.

Sadly, few academics have gotten funds to study this topic in the seven decades since Teilhard’s books. I think this is because the whole idea of a developmental noosphere seems just too positive and convenient for human minds to accept it as likely. We’ve been selected by evolution to think first and deepest about the negatives, about all the ways things might fail. Dystopias outsell protopias by roughly ten to one, in my estimation.

In 2008, philosopher Clement Vidal and I founded a community, Evo-Devo Universe, to explore the idea of the universe as an evolutionary and developmental system. Much has been written about the universe as an evolutionary system, a universe that unpredictably explores, creates, and gets more diverse in many local ways. There is much less written, in recent decades, about the universe as a developmental system, a universe that constrains, conserves, integrates, grows, matures, and follows a predictable life cycle, as a system. We all both evolve and develop, as living systems. Doesn’t it make sense that the universe might do this as well?

If our universe both evolves and develops, then a coming noosphere on Earth, a great diversity of noospheres on other Earthlikes, an eventual cosmosphere that integrates these noospheres, under dynamics of cooperation, competition, and selection, an eventual end to this universe, and a new beginning in the multiverse all make sense, from a systems perspective. These are big ideas. They need a lot of testing, and better science, simulation, physics, and information theory than we have today.

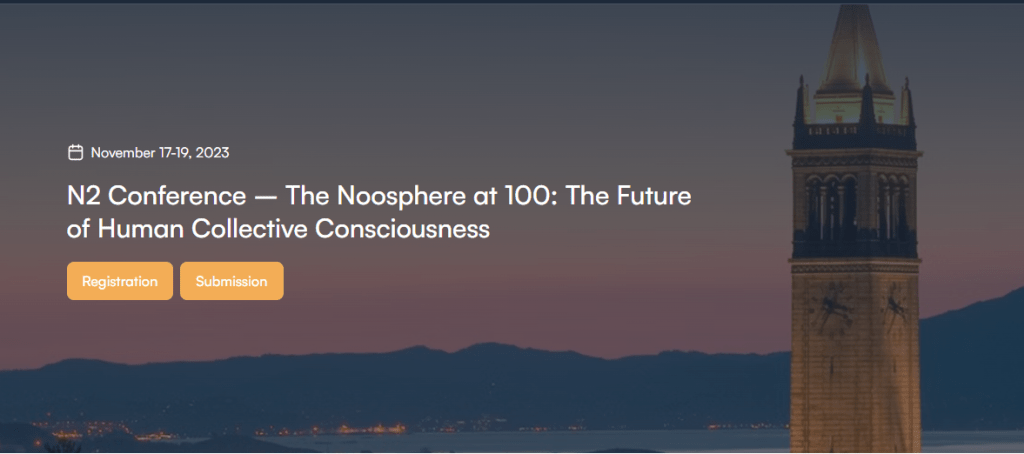

Fortunately, there is a new conference, partly developed by my colleague Clement Vidal, that will explore many dimensions of the noosphere concept at UC Berkeley later this month. It is funded by a visionary philanthropist, Ben Kacyra, founder and president of the nonprofit research organization Human Energy.

The Noosphere at 100, November 17–19, 2023, honors the fact that it has been 100 years since Teilhard de Chardin first wrote about the noosphere idea. It includes a number of fantastic speakers including Terrence Deacon, Francis Heylighen, Shiela Hughes, Kevin Kelly, Robert Kuhn, Jennifer Morgan, Greg Stock, Brian Swimme, Clement Vidal, David Sloan Wilson, Claire Webb, and Robert Wright, who have written about the idea. Best of all: you can watch it for free online. That’s noospheric thinking!

I’m happy to announce that I’ll be presenting a poster at this event. If you want an overview of the topics I’ll cover, let me recommend my talk, The Goodness of the Universe, 2022 (80 mins, YT). I also got into a great long conversation on this topic over on the space discussion community Centauri Dreams in 2021.

I consider the emergence of a global superorganism by far the most probable and necessary story for the future of intelligent life on Earth: a superorganism that incorporates all humans and their institutions as its “cells”, “tissues”, “organs” and “networks”, integrating them into a diverse yet unified entity. The head of that superorganism must be a hive mind (collective intelligence), one that allows individuality, disagreement and conflict, just as we argue with ourselves all the time, using diverse mindsets, yet a mind that also has an integrated and higher consciousness, with a unitary sense of self. Just like us.

How could it be otherwise? How else will we get the kind of safety and interdependence we need, in a world with continually accelerating individual power and ability?

The subtle value shifts in our global public that are happening now, as we all get more interconnected, are a weak signal of the coming collective consciousness. The modern generation puts empathy and ethics first, even as outdated political, economic, and social structures and traditions keep them from changing the system as rapidly as they should. There are many new problems of progress that we must address, including corrupting levels of wealth, and digital systems that are still very dumb, unable to be used to guide us to truth and evidence. But we can overcome these problems, with hard work and vision. I’d love to hear your views.

This noospheric future has seemed highly likely to me ever since I first considered the nature of accelerating change in high school. In a key corollary, as advanced civilization minds inevitably meet other civilization minds in coming generations, through whatever mechanisms are most efficient (I argue in my own papers for a likely migration to inner space, and black-hole-like environments), they’ll likely undergo the same merger process, increasingly unifying the Cosmos. Stapledon offers the first fictional version of a cosmosphere in Star Maker. It’s exhilarating to think that our drive to connect with others may one day reach such scale.

But it is also critical to recognize that even if a global superorganism is our highly likely fate, if we are to survive, this does not mean that our particular species will get there, or even if we do, that we will get to that positive emergence in a particularly ethical, empathic, and life-affirming way. The evolutionary paths we choose to take to this common developmental future will surely be quite different on each planet. While development is predictable, evolution is the opposite. It is the evolutionary paths that we take, not the developmental destiny we face, that are the essence of our individual and collective freedom, responsibility and moral choice.

Just knowing that a particular future, the global superorganism, is highly likely or inevitable in no way absolves us of the great challenges ahead in getting there in the most humanizing ways that we can. But at the same time, knowing where we are ultimately going is a huge foresight advance, as it can focus our strategies and efforts on the most productive and positive-sum goals, the ones the universe appears to be nudging us toward, by its very nature, as a developing system, based on fixed, finite, and highly tuned physical and informational parameters. Again, just like us.

For those interested in evo-devo models of our universe, let me recommend our research and discussion community for publishing scholars in relevant disciplines, Evo-Devo Universe, which I co-founded with philosopher Clement Vidal in 2008.

My own views on the universe as an evo-devo system can be found in the following papers:

Answering the Fermi Paradox: Exploring the Mechanisms of Universal Transcension, 2002

Evo-Devo Universe? A Framework for Speculations on Cosmic Culture, 2008

The Transcension Hypothesis: Why and How Advanced Civilizations Leave Our Universe, 2012

Humanity Rising: Why Evolutionary Developmentalism Will Inherit the Future, 2015

Key Assumptions of the Transcension Hypothesis, 2016

Evolutionary Development and the VCRIS Model of Natural Selection, 2018

Evolutionary Development: A Universal Perspective, 2019 (Web) (PDF)

Exponential Progress: Thriving in an Era of Accelerating Change, 2020

To everyone reading this: Thanks for all you do!