I have a new interview, Foresight: Your Hidden Superpower (YouTube, Spotify, Apple), with Nikola Danaylov of the Singularity Weblog. Nikola has done over 290 great interviews. They are a rich trove of future thinking and wisdom, featuring acceleration-aware folks like Ada Palmer, Melanie Mitchell, Cory Doctorow, Sir Martin Rees, Stuart Russell, Noam Chomsky, Marvin Minsky, Tim O’Reilly, and other luminaries. As in my first interview with him ten years ago, he asks great questions and shares many insights.

We cover a lot in 2 hrs and 20 mins. Below is an outline of a dozen key topics, for those who prefer to skim, or don’t have time to watch or listen:

- We discuss humanity as best defined by three very special things: Head (foresight), Hand (tool use), and Heart (prosociality). We talk about how these three things were critical to starting the human acceleration, with our first tool use (in cooperative groups), and why foresight, of the three, is our greatest superpower. An author who really gets this view is the social activist David Goodhart. I recommend his 2020 book, Head Hand Heart.

- We discuss human society as an awesomely inventive and coopetitive network. We are selected by nature to try to cooperate first and compete second, within an agreed-upon and always improving set of network rules, norms, and ethics. What’s more, open, bottom-up, empowering, democratic networks, like the ones we are seeing right now in the West’s fight in Ukraine, increasingly beat closed, top-down, autocratic networks as the world grows more transparent. We talk about lessons from the invasion of Ukraine for the West, Russia, and China.

- We discuss why a decade of deep learning applications in AI gives us new evidence for the Natural Intelligence hypothesis, the old idea (see Gregory Bateson) that deep neuromimicry and biomimicry (embodied, self-replicating AI communities, under selection) will be necessary to get us to General AI, and are likely to be the only easily discoverable path to that highly adaptive future, given the strict limits of human minds.

- We talk about what today’s deep learners are presently missing, including compositional logic, emotions, self- and world-models, collective empathy, and ethics, and why DeepMind’s module-by-module emulation approach is a good way to keep building more useful, trustable AI. For those interested, Mitchell Waldrop’s great 2019 PNAS article, “What are the limits of deep learning?”, says more.

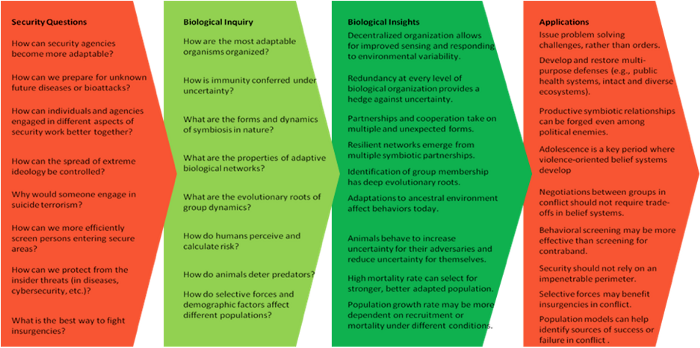

- We discuss the Natural Security hypothesis: that we’ll get security and goal alignment with our AIs in the same way we gained it with our domesticated animals, and with ourselves (we have self-domesticated over millennia). We will select for trustable, loyal AIs, just as we selected for trustable, loyal people and animals. The future of AI security, in other words, is identical to the future of human security. We will need well-adapted networks to police the bad actors, both AI and people. Fortunately, network security grows with transparency, testing, proven past safe behavior, and perennial selection of safer, more aligned AIs. There is no engineering shortcut to natural security. I feel strongly that human beings are not smart enough to find one. For more on this, you may enjoy the work of the late, great biologist Rafe Sagarin, summarized for a Homeland Security audience in the slide below.

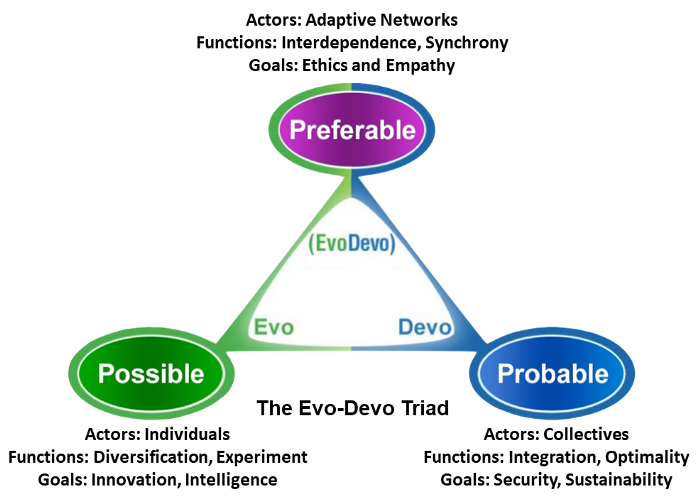

- We talk about the philosophy of Evolutionary Development (Evo-devo, ED), which looks at all complex replicating systems as driven by both evolutionary creativity and developmental constraint. We discuss why both processes appear to be baked into the physics and informatics of our universe itself. Quantum physics, for example, tells us that if we act to determine the value of one of a pair of complementary variables at the quantum scale (position, say), the other (momentum) becomes statistically uncertain. Both predictability and unpredictability are fundamental to our universe, and they worked together, somehow, to create life, with all its goals and aspirations. How awesome is that?

- We describe how this evo-devo model of complex systems tells us that the three most fundamental types of foresight we can engage in are the “Three Ps”: thinking and feeling about our Probable, Possible, and Preferable futures. We explore why it is often best to make these three assessments in this order, at least at first, to arrive at our most adaptive goals, strategy, and plans.

- We talk about how, contrary to what many rationalists think, our universe is only partly logical, partly deterministic, and partly mathematical. Turing-complete processes like deduction and rationality can only take us so far. We actually depend most on their opposite, induction, to keep guessing at the new rules, correlations, and order that constantly emerge as complexity grows. What’s more, we use deduction and induction to do abduction, to create probabilistic models, and to analogize. Abduction is actually the most useful, high-value form of human thinking. Deduction and rationality are in perennial competition with induction and gut instinct, and the latter are usually more important. Both are critically necessary to better model making and visioning. If we live in an evo-devo universe, this will be true for our future AIs as well. It is always our vision of both the preferred and the preventable future (protopias and dystopias) that helps or hurts us most of all.

- We describe intelligence as inherent in autopoiesis (self-maintenance, self-creation, and self-replication). Any autopoietic system is going to have, by definition, both evolutionary and developmental dynamics. The system’s evolutionary (creative, unpredictable) mechanisms will guide its exploration, creativity, diversity, and experimentation. Its developmental (conservative, predictable) mechanisms will guide its constraint, convergence, conservation, and replication, on a life cycle. The interaction of both dynamics, under selection, creates adaptive (evo-devo) intelligence. Intelligence and consciousness work to “knit together” these two opposing dynamics in an adaptive network. In my view, machines will need to become autopoietic themselves if they are to reach any generality of intelligence. A cognitive neuroscientist who largely shares this view is Danko Nikolic. His concept of practopoiesis (though it does not yet include an evo-devo life cycle) is quite similar to my views on autopoiesis. I recommend his 2017 paper on the design limits of current AI, in terms of levels of learning networks. I’ll explore his work in my next book, Big Picture Foresight.

- We talk about today’s foresight, and how natural selection has wired us to continually predict, imagine, and form preferences about what lies milliseconds to minutes ahead. The better we get at today’s foresight, the better we get at short-term, mid-term, and long-term foresight. Today’s foresight, the realm of our present action, is both the easiest to improve and the most important to practice. I go into the psychology and practice of foresight in my new book, Introduction to Foresight, which we discuss in this interview. If you get a chance to look it over, please tell me what you think and how I can improve it. I greatly appreciate your feedback and reviews.

- We also talk about a number of other future-important topics, including Predictive and Sentiment Contrasting, the Four Ps (our Modern Foresight Pyramid), Antiprediction Bias, Negativity Bias, why and how accelerating change occurs (Densification and Dematerialization), the Transcension Hypothesis, Existential Threats, why it makes sense to Delay Nuclear Power to prevent a weapons proliferation dystopia (see my new Medium article on this topic), our potentially Childproof Universe, and the Timeline to the Singularity/GAI (my current guess is 2080).

- My concluding message is this: regardless of what you hear in the media (due to both negativity and antiprediction bias), our networked evo-devo future looks like it is going to be a lot more amazing and resilient than we expect; in life’s history so far, well-built networks always win (and are immortal, unlike individuals); and foresight is our greatest superpower. The more we practice it, the better our own lives and the world get. Don’t believe me? Are you worried about tough, long-term global problems like climate change? Watch evidence-based, helpful, and aspirational videos like the one below, from Kurzgesagt. Positive changes and great solutions are continually emerging in our global network. We all just need to better see those changes and solutions, so we can thrive. Never give up on evidence-seeking, hope, and vision!

To say this all more simply: #ForesightMatters!

NOTE: This article can also be found on my Medium page, the best place to leave comments and continue the discussion. This site has become a legacy site, because WordPress still doesn’t pay its authors, and its software is still very primitive. For example, none of the formatting errors on this post show up in the edit window of their new Gutenberg editor after I paste in clean code from Medium, and I have no idea how to fix them. Sorry!

John Smart is a global futurist and scholar of foresight process, science and technology, life sciences, and complex systems. CEO of Foresight University, he teaches and consults with industry, government, academic, and nonprofit clients. His new book, Introduction to Foresight, 2022, is available on Amazon.